A/B Testing

This article explains how to use A/B tests in Bloomreach Engagement, describes their components, and illustrates them with a set of use cases.

A/B testing compares two versions of a webpage, an email campaign, or an aspect of a scenario to evaluate which performs best. By showing the different variants to your customers, you can determine which version is the most effective, backed by data.

Bloomreach Engagement offers A/B testing in scenarios, email campaigns, weblayers, and experiments. A customer is assigned a variant, which is shown to them instantly, when they reach an A/B split node in a scenario or match the conditions for displaying a weblayer, experiment, or email campaign.

Using A/B testing in Bloomreach Engagement

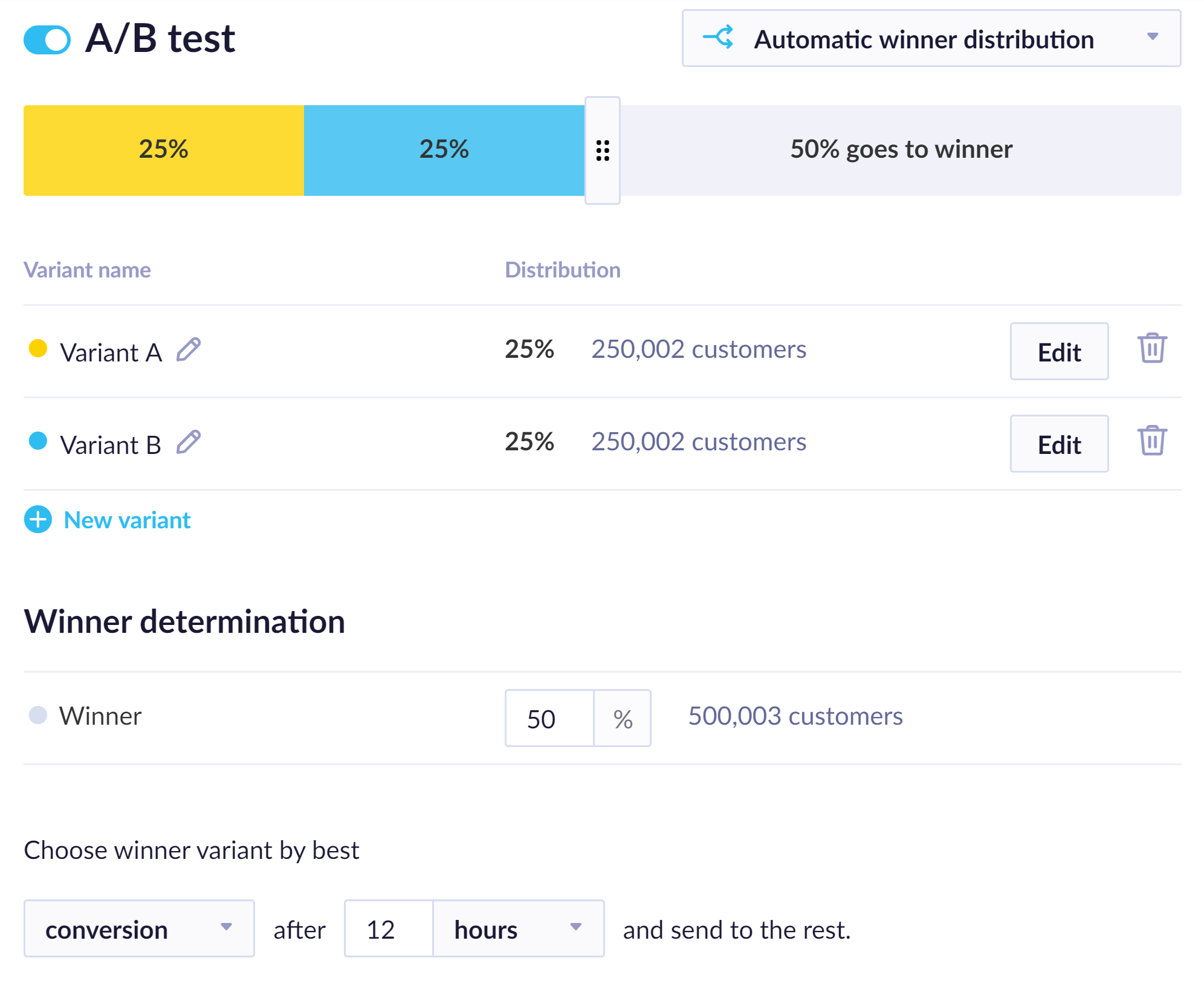

There are two types of A/B tests: Automatic winner/traffic distribution and Custom traffic distribution. With the first, Bloomreach Engagement identifies the more effective variant and automatically runs it for most of the audience. With the second, you manually specify the probability of occurrence for each variant.

Automatic winner distribution

Automatic winner distribution is available in scenarios and email campaigns.

Automatic winner distribution tests your A/B test variants on a small share of your target audience, determines which variant is more effective, and then automatically sends only the winning variant to the rest of the audience.

For example, you can quickly test different subject lines or CTA buttons in your email or any other communication channel, including SMS, retargeting, webhooks, and others.

If the success rate of the two variants is equivalent, the remainder of the campaign will be sent in the original ratio.

The winning variant is chosen based on a metric and timeframe (usually hours) selected in the Winner determination. The metric can be conversion rate, click rate, open rate, or any custom metric (event). The winning variant is visible in the interface after the initial test.
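The winner determination described above can be sketched roughly as follows. This is a minimal illustration, not Bloomreach Engagement's implementation: the names `VariantResult` and `pick_winner` and the `tolerance` parameter are assumptions made for the example, and the metric is reduced to a simple success rate.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class VariantResult:
    name: str
    recipients: int  # customers who received the variant during the test phase
    successes: int   # events counted for the chosen metric (opens, clicks, ...)

    @property
    def rate(self) -> float:
        return self.successes / self.recipients if self.recipients else 0.0


def pick_winner(results: Sequence[VariantResult], tolerance: float = 0.0) -> Optional[str]:
    """Return the best variant's name, or None when the top two rates are equivalent.

    `tolerance` is a hypothetical knob: rates closer than this count as a tie.
    Expects at least two variants.
    """
    ranked = sorted(results, key=lambda r: r.rate, reverse=True)
    best, runner_up = ranked[0], ranked[1]
    if best.rate - runner_up.rate <= tolerance:
        return None  # no winner: the rest is sent in the original ratio
    return best.name


# Results collected on the small test share of the audience (made-up numbers).
test_phase = [VariantResult("A", 1000, 200), VariantResult("B", 1000, 260)]
winner = pick_winner(test_phase)
print(winner or "no winner, keep the original ratio")
```

Returning `None` for a tie mirrors the rule that the remainder of the campaign is sent in the original ratio when the variants perform equivalently.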

Multilingual variants in Automatic winner distribution

When testing multilingual variants of your email campaign, the automatic winner distribution does not distinguish between them, and the performance of your email campaign as a whole is evaluated, regardless of its language version.

Automatic traffic distribution

Automatic traffic distribution is available in experiments and weblayers.

Automatic traffic distribution tests the variants on a share of your users and recognizes which variant achieves the goal better. It then shows the preferable variant to most of the audience while continuing to test the other variant on a small share of users. If the other variant starts to perform better, the distribution is automatically re-evaluated, and that variant can become the preferable one.
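The behavior described above resembles an explore/exploit strategy. The sketch below uses a simple epsilon-greedy rule to illustrate the idea. The source does not say which algorithm Bloomreach Engagement uses, so the class `EpsilonGreedySplit` and the `explore_share` parameter are purely illustrative assumptions.

```python
import random
from typing import Dict, Optional, Sequence


class EpsilonGreedySplit:
    """Show the best-performing variant to most users, keep testing the rest."""

    def __init__(self, variants: Sequence[str], explore_share: float = 0.1,
                 rng: Optional[random.Random] = None) -> None:
        self.explore_share = explore_share  # assumed share of traffic kept for testing
        self.rng = rng or random.Random()
        self.shown: Dict[str, int] = {v: 0 for v in variants}
        self.goals: Dict[str, int] = {v: 0 for v in variants}

    def rate(self, variant: str) -> float:
        shown = self.shown[variant]
        return self.goals[variant] / shown if shown else 0.0

    def preferred(self) -> str:
        # The variant with the highest goal rate so far.
        return max(self.shown, key=self.rate)

    def choose(self) -> str:
        # A small share of users gets a random variant; everyone else gets
        # the variant that currently performs best.
        if self.rng.random() < self.explore_share:
            variant = self.rng.choice(list(self.shown))
        else:
            variant = self.preferred()
        self.shown[variant] += 1
        return variant

    def record_goal(self, variant: str) -> None:
        self.goals[variant] += 1
```

Because every goal updates the rates, a variant that starts to perform better later can overtake the current preferred one, which matches the re-evaluation described above.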

Custom traffic distribution

Custom traffic distribution is available in scenarios, weblayers, experiments, and email campaigns.

Custom traffic distribution allows you to manually specify to what percentage of your total audience each variant will be shown. If you want to evaluate the success of your A/B test, you can either go to an auto-evaluation dashboard, or you can do it manually with the A/B test basic evaluation guide.
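Conceptually, a custom distribution is a weighted random choice. The following is a minimal sketch of that idea, assuming percentages that sum to 100. The function name `assign_variant` and the variant names are made up for the example and are not part of the product.

```python
import random
from typing import Dict, Optional


def assign_variant(distribution: Dict[str, float],
                   rng: Optional[random.Random] = None) -> str:
    """Pick a variant with probability proportional to its percentage."""
    total = sum(distribution.values())
    if abs(total - 100) > 1e-9:
        raise ValueError(f"Percentages must sum to 100, got {total}")
    rng = rng or random.Random()
    names = list(distribution)
    weights = [distribution[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


# Example: 20% of the audience sees Variant A, 80% sees Variant B.
print(assign_variant({"Variant A": 20, "Variant B": 80}))
```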

Calculating statistical significance

You can calculate the performance and statistical significance of your A/B test with our A/B Test Significance Calculator.
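If you want to sanity-check a result yourself, one common approach is a two-proportion z-test. The sketch below is a generic statistical example. The method used by the A/B Test Significance Calculator is not described here and may differ, and the function name `two_proportion_z_test` is an assumption.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, nothing to distinguish
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value


# Made-up numbers: 200 of 1,000 customers converted on A, 260 of 1,000 on B.
z, p = two_proportion_z_test(200, 1000, 260, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (for example, below 0.05) suggests the difference between the variants is unlikely to be due to chance alone.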

Control group in weblayers

By default, the control group is not shown any variant. If the JS code specifies any custom conditions, create a custom control group that applies the same conditions even though it doesn’t show an actual weblayer. Otherwise, the compared groups would not be homogeneous, and the evaluation would be inaccurate.

Auto-evaluation dashboard

A/B tests also include an auto-evaluation dashboard. The report covers events that occur within the evaluation window, and the uplift of each variant is calculated relative to the average performance of all variants in the sample.
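As a rough illustration of an uplift measured against the average of all variants, consider the sketch below. It assumes that "average performance" means the pooled rate across all variants. The dashboard's exact formula is not given here, and the function name `uplifts_vs_average` is hypothetical.

```python
from typing import Dict, Tuple


def uplifts_vs_average(results: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Map each variant to its relative uplift over the pooled average rate.

    `results` maps a variant name to (customers, events), where events are
    those that occurred within the evaluation window.
    """
    total_customers = sum(customers for customers, _ in results.values())
    total_events = sum(events for _, events in results.values())
    if total_customers == 0 or total_events == 0:
        raise ValueError("Need at least one customer and one event to compute uplift")
    average_rate = total_events / total_customers  # assumption: pooled average
    return {
        name: (events / customers - average_rate) / average_rate
        for name, (customers, events) in results.items()
    }


# Made-up sample: both variants were shown to 1,000 customers.
uplifts = uplifts_vs_average({"A": (1000, 100), "B": (1000, 150)})
for name, uplift in uplifts.items():
    print(f"{name}: {uplift:+.0%}")
```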

Technical notes about A/B tests

You will not be able to edit any variant that has already been visited by a customer.

- A customer is assigned the same variant when going through the same A/B split in the scenario.

- In weblayers, each customer is assigned the variant in the event “a/b test” and will have the same variant in subsequent visits.

- A customer can be assigned more than one variant if they visit the website from different browsers or devices. In that case, the system does not know it is the same customer until they are identified (through a login, for example), so it can assign a different variant on each of those visits.

- For correct A/B test evaluation, all groups should be identical in conditions except for the variant.

- Weblayers offer automatic A/B testing, which allows preference of the best-performing variant based on the chosen goal.
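The assignment rules in the notes above can be pictured with a small sketch. Here an in-memory dictionary stands in for the stored “a/b test” event, and `StickyAssigner` is a hypothetical name. The sketch illustrates the behavior only and does not describe how the platform stores assignments.

```python
import random
from typing import Dict, Optional, Sequence


class StickyAssigner:
    """Assign a variant once per known ID and reuse it on later visits."""

    def __init__(self, variants: Sequence[str],
                 rng: Optional[random.Random] = None) -> None:
        self.variants = list(variants)
        self.rng = rng or random.Random()
        self.assignments: Dict[str, str] = {}  # stand-in for stored assignments

    def variant_for(self, customer_id: str) -> str:
        # The first visit draws a variant; every later visit returns the same one.
        if customer_id not in self.assignments:
            self.assignments[customer_id] = self.rng.choice(self.variants)
        return self.assignments[customer_id]


assigner = StickyAssigner(["Variant A", "Variant B"])
first_visit = assigner.variant_for("cookie-laptop")
repeat_visit = assigner.variant_for("cookie-laptop")  # same as first_visit
# Until the customer logs in, a second device looks like a different customer,
# so it gets its own independent draw and may land on another variant.
other_device = assigner.variant_for("cookie-phone")
```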

A/B test event attributes

You can read about “a/b test” event attributes in the System events article.

A/B test FAQ

See the A/B test FAQ article for the most common questions about A/B tests.

Example use cases for A/B testing

Time of sending

Identifying the best day and time to send campaigns can significantly boost your open rates. Testing send times can increase open rates by up to 20%. If you’re sending at 7 AM each morning, so are many other brands. What happens when you send at lunchtime or after the working day? The ideal send time varies for each brand, which makes it a very impactful element to test.

With Bloomreach Engagement, you can personalize email send times for every customer with just one click. You can optimize predictions based on open rates or click-through rates according to your goals.

Ideal frequency

The number of messages that you send to customers is also important. In Bloomreach Engagement, you can easily set up frequency management policies that determine how many messages a customer from a particular segment can get. By doing so, you can avoid spamming and overwhelming your customers. Test various frequency policies and see what works best for your brand. Remember, less is sometimes more!

Improving email open rates

The factors that impact open rates are pretty limited, so they are an excellent place to start. In essence, there are just four things to test with regard to open rates:

- Subject lines

- Pre-headers

- Sender name

- Time of sending

Subject lines:

A good subject line can increase opens by as much as 15% to 30%. Its role is crucial: it has to grab recipients’ attention in a jam-packed inbox where many other brands are crying out, “Open me.” For this reason, subject lines are not subtle. In addition to grabbing attention, a subject line needs to communicate the number one stand-out reason your customers should open the email.

Pre-headers:

Because most of us view emails on mobile devices, the pre-header has become a very important part of email anatomy. In the inbox view, it sits under the subject line and acts almost like a secondary subject line. It can serve two distinct purposes.

1. It can boost the impact of the subject line with an additional supporting message.

Subject Line: “Footwear lovers, get ready.”

Pre-header: “We’ve added over 100 gorgeous new shoes!”

2. It can add a secondary reason to open the email.

Subject Line: “Footwear lovers, get ready.”

Pre-header: “Plus, check out these statement dresses!”

If you have more than one content piece in the email, the second option can capture the interest of more recipients (which will drive more opens).

Sender Name:

Slight amendments to the sender’s name (not the email address) can be significant. The sender’s name is normally your brand name. However, specific brand name variants can drive up open rates when tested. Make sure you don’t confuse recipients, but variants of the sender name worth testing include:

“Brand X – New in”

“Brand X – Exclusives”

“Brand X – The Big Sale”

“Brand X – The Style Edit”

Improving content

Beyond optimizing open rates, a good testing plan will also cover the campaign’s content. Here there are many more factors to test, and it is possible to increase click activity by 25% when you learn how best to present your content. Some of the most impactful tests are:

- Buttons (CTAs) versus no buttons

- Links versus buttons

- “Shop new” versus other CTA vocabulary

- Price versus no price

- Stylized banners versus product photography

- Images with supporting text versus images only

- More products versus fewer products

- Recommendations versus no recommendations

- Using abandoned cart items in your newsletter versus not using them

- Browsed item category “New in” versus no browsed item category content

- Clearance items versus no clearance items (people still love bargains!)

Improving conversion rates

Here are some ideas you can try to improve your conversion rates.

- Landing on the product page versus landing on the category page (please note the actual product clicked needs to be at the top left of the category view page)

- Adding delivery options on the product pages versus not adding delivery options on the product pages

- Category pages sorted by new in versus category pages sorted by best sellers

A/B Testing Best Practices

- Make sure you’re following best practices regarding email deliverability. There won’t be much to test if your emails don’t make it to the inbox.

- Test one email element at a time. Testing subject lines and pre-headers at the same time will not tell you which element had the most impact.

- However, you can test several concepts for a single element in one test. For example, you can test several subject lines at once, or several versions of the CTA language.

- Be careful with winning subject lines, pre-headers, and even sender names in the long run. Overuse of these tends to erode performance over time.

- When setting up your A/B test in Bloomreach Engagement, focus on a single metric to identify the most successful campaign. For subject lines, that metric needs to be the open rate. For campaign content ideas, it is most likely to be the click-through rate, although it could also be the conversion rate. For example, when testing “price shown versus price not shown”, the price shown generated fewer clicks but higher conversions. Testing results will vary for each brand, so run your own tests to ensure the best outcome.

- Allow a window of time for the test recipients to open and engage with the email before deploying the most successful campaign to the holdout group. The longer the window, the better the odds that the deployed campaign is the best-performing one. Our recommendation is a 4-hour wait.

- To gain confidence in testing and its benefits, start with simple tests before doing more ambitious ones. The tests for opens are a great place to start.

- Plan your content tests in advance so that your content team can prepare the test variants you want to run.