Apple Glass | 2025 Release, Features, Specs, Rumors

If you were to believe science fiction, the future of wearables has always been augmented reality. Apple could very well be close to achieving that future with a wearable heads-up display called “Apple Glass.”

Apple has a long way to go before it can release a sophisticated pair of glasses that display information on the lenses. They need to be lightweight and somewhat stylish while still being a computing device that relies upon battery power.

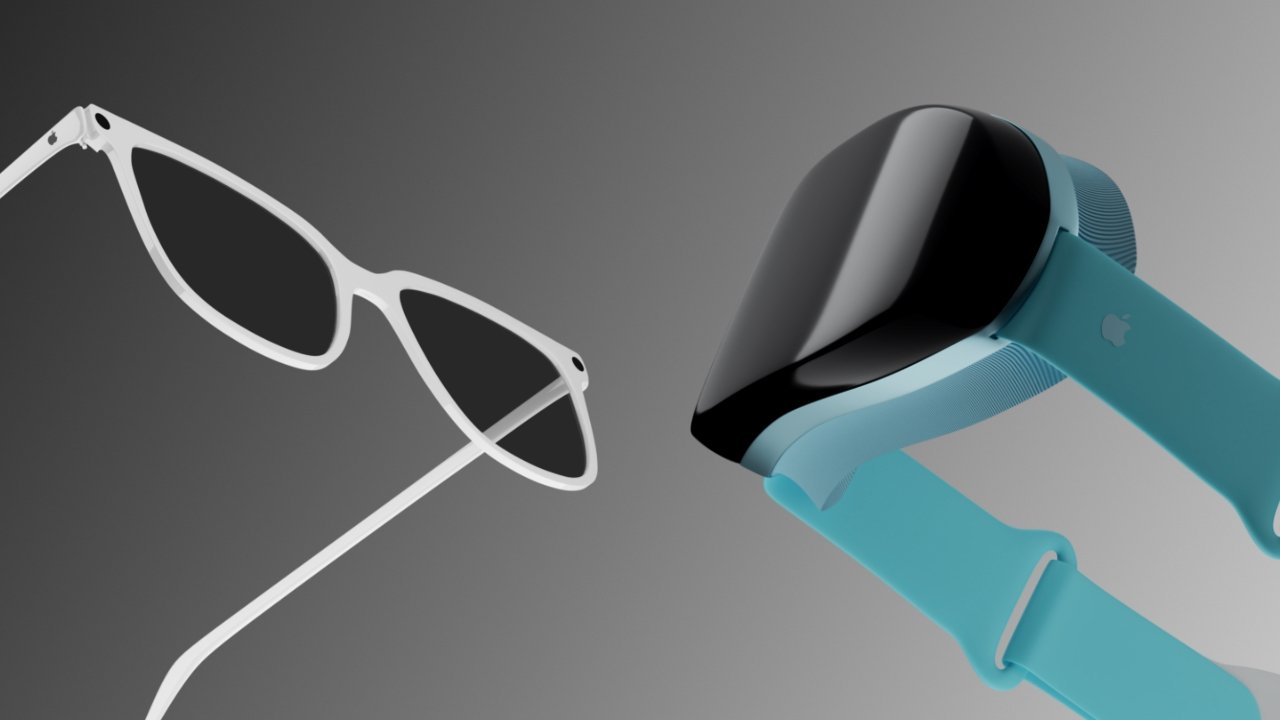

There will likely be a stepping stone device to get the technology and software ready in the form of a virtual reality headset. This bulky headset would be worn like a set of goggles and display information to the user inside a visor, obscuring the real world.

Developers would use this “Apple VR” headset to work on generating 3D objects and software that would ultimately be used in “Apple Glass.” This product development could take years, leading to a potential release sometime in 2025 or later.


“Apple Glass” Rumored Features

There have been rumors about wearable AR glasses for years, but very few useful tidbits leaked until 2020. Patent applications have been the best source of information yet, and show some promising features for an Apple headset.

Apple Glass will likely follow the VR headset using similar technology stacks

The following features are a combination of rumors, patents, and leaks that represent our best look at what “Apple Glass” might be. Many of Apple’s competitors have begun making products that will compete with Apple’s eventual heads-up display, but none have reached the sophistication of the rumored Apple product.

Facebook, now Meta, hopes to be among the first companies to create a universal headset that brings AR and VR to the masses. No word has emerged from Apple beyond CEO Tim Cook’s continual praise of augmented reality and its future.

Design

There haven’t been leaked photos of the actual design, but it is rumored that Apple wants these glasses to look fashionable and approachable. Apple Watch is a good place to look for how Apple handles wearable design: subtle, but still obviously a piece of tech.

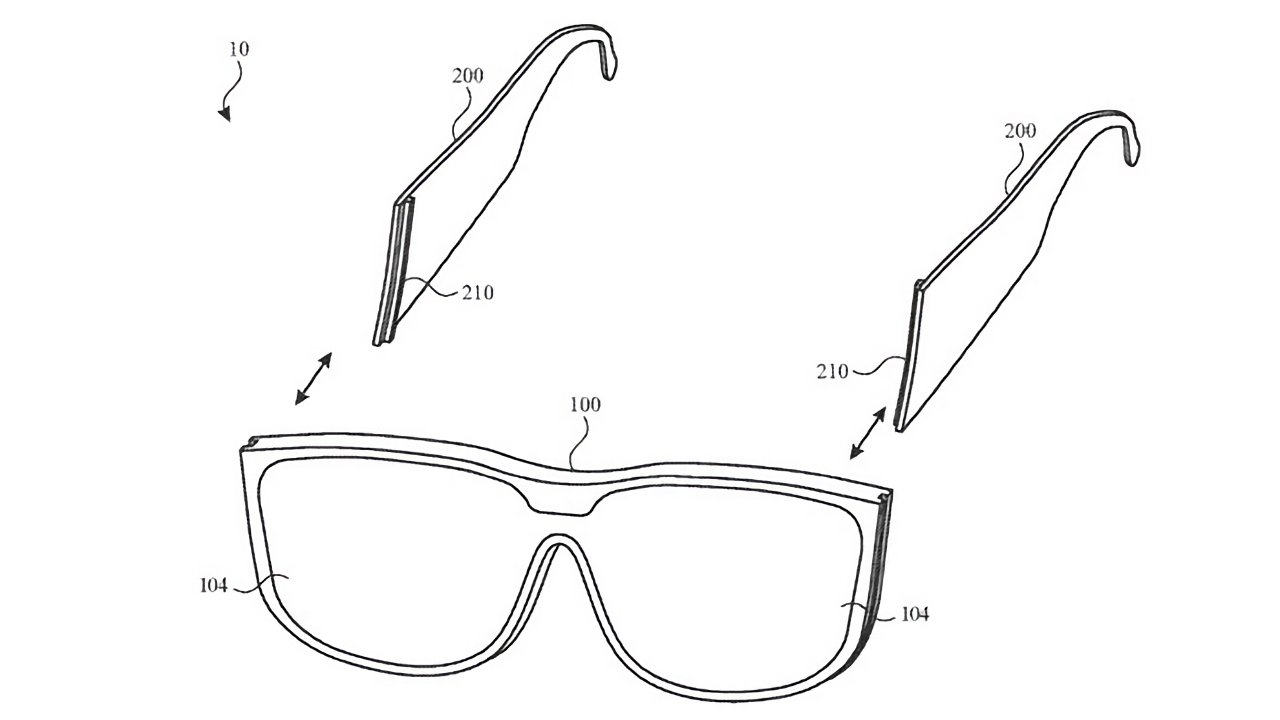

Much of what has been shown in patents looks like safety glasses, though these are prototype drawings meant to illustrate the patent and not the product. Ultimately “Apple Glass” could look like an average pair of glasses, but there is no way of knowing until something more official leaks out.

Apple’s AR glasses may have modular parts

Designing a tech product that users will want to wear on their faces is no simple task. Style, color, and even lens shape will make or break most purchasing decisions, and Apple is a company known for a one-size-fits-all approach to many of its products.

Jon Prosser had seen the early prototypes of the glasses and called them “sleek” in a June 2020 leak. He estimated a 2021 release, although no such product was announced that year.

A new rumor came from Prosser on May 21, when he said there would be a “heritage edition” set of glasses designed to look like the ones worn by Steve Jobs. Bloomberg’s Mark Gurman felt the need to step in and say that all rumors up to that point were false.

Gurman asserts that there are two distinct devices, as AppleInsider has reported over the years: one is the purported glasses, and the other is a VR headset. Prosser agrees there are two devices but did not agree with Gurman’s lengthy release timeline of 2023 for the glasses.

Sony may be supplying half-inch Micro OLED displays for an Apple headset at 1280×960 resolution. The order for the displays is expected to be fulfilled by the first half of 2022, according to sources. These are likely for the Apple VR headset, however.

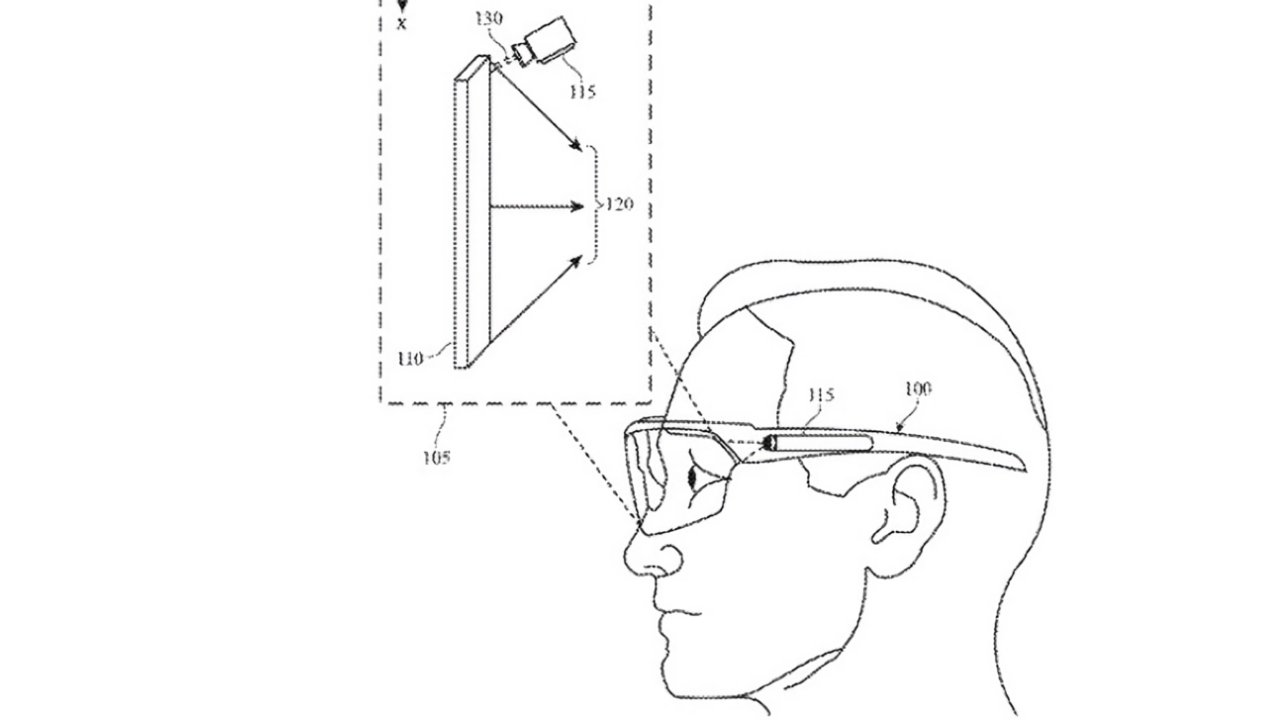

A patent revealed late in 2020 points to an Apple VR or AR headset automatically adjusting the lenses placed in front of the user’s eyes by using fluids to deform the shape of the lens to improve the user’s eyesight. The patent suggests a series of lens components around a central fluid chamber that can be inflated and emptied by a connected pump and reservoir.

Later rumors pointed to delays in manufacturing the Apple glasses. Analyst Ming-Chi Kuo says the “Apple Glass” may not be ready until 2025, with a set of AR contacts set for a 2030 launch.

Processing Capabilities and Battery Life

Wireless signals, smart displays, microphones, powerful processors, and LiDAR add up to a device in need of a big battery. If Apple wants a device that everyone wants to wear, it not only has to look good, it has to perform. A massive battery and hot processor just won’t cut it, so Apple will have to find a balance.

One aspect Apple can cut back on is processing power. As with the first-generation Apple Watch, the smart glasses could rely upon the iPhone for all processing needs and only display that information.

Pairing Apple’s smart glasses should be as simple as AirPods

By relaying information from the phone to the glasses, Apple will drastically cut down on local processing and need only worry about powering the display and sensors. Their rumored release date is in 2025, so the technology allowing a slim and light pair of “Apple Glass” could mature by then.

One patent filed by Apple shows a series of base stations and IR tracking devices that could be used to process data and transfer information to “Apple Glass” or a VR headset. These tracking tools being offloaded to a dedicated base station would allow better tracking and less battery usage for the wearable. Think of a museum being able to follow a user around and show relevant data in the glasses with the base stations doing all the work.

Jony Ive once stated that a product could be in development for years, waiting for the technology to catch up with the idea. Apple is likely to take the same approach here, developing the AR glasses into different iterations internally while the technology is allowed to mature.

Apple’s AirPods are a good example of a super compact device with good battery life. Even though AirPods Pro are small, they last for several hours with ANC enabled. If the prototype models are “sleek,” like Prosser said, then Apple may have already solved its design problems surrounding battery life.

The glasses will need to serve the customer as a piece of tech and fashion, but will also need to serve a third function for many: work as actual glasses. It is expected that Apple will offer an option to order a prescription lens, but a patent describes another option. The lenses themselves could adjust for the user wearing them.

Privacy and “Apple Glass”

Apple does not plan on including a camera in “Apple Glass,” as the privacy and social implications alone would be insurmountable. According to Jon Prosser, Apple will only have sensors like LiDAR on the frame, allowing environmental awareness and gestures without infringing on others’ privacy.

Apple likely to rely upon LiDAR and other sensors instead of cameras

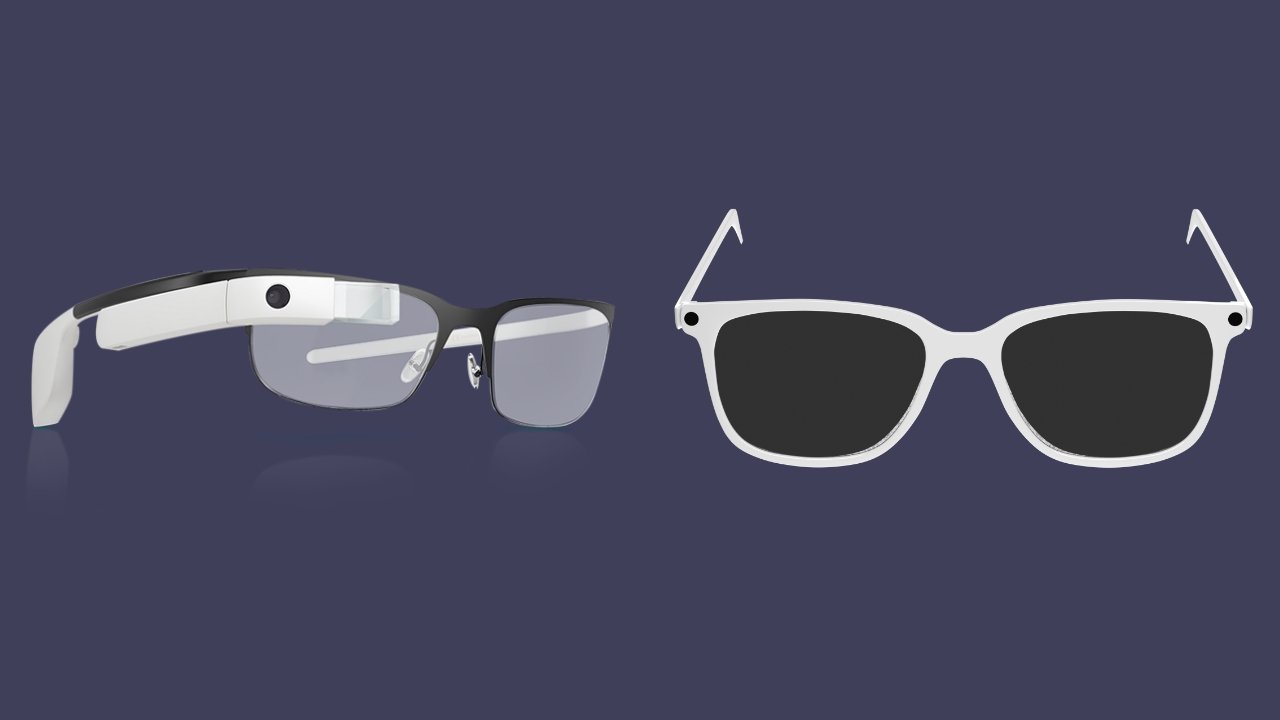

Apple tends to sell products with a lot of overlap, but the iPhone has always been the Apple camera, which isn’t likely to change. Unlike Google Glass, which seemed to want to replace the smartphone entirely, Apple’s product will augment the iPhone experience.

Another expectation is that only the wearer will be able to view the content on the glasses so that a random passerby cannot peek into your business.

Apple has also looked into using “Apple Glass” for authentication. Rather than utilizing the built-in biometrics on your iPhone, the headset could detect if the wearer is looking at the device and unlock it immediately. The feature would only work after authenticating the wearer when putting on the glasses for the first time, much like Apple Watch.

Starboard User Interface

The iPhone has Springboard, the set of icons that act as your home screen. Apple’s glasses have “Starboard.” No interface elements have leaked or even been described, but it is assumed that Apple will adapt its iconography and UI for an AR interface.

Code surrounding the testing of such a UI was found in the iOS 13 Release Candidate. STARTester code and references to a device that could be “worn or held” were found in a readme file. Not much else came from this, but it does corroborate the rumored “Starboard” UI name.

The LiDAR sensor will allow for gesture control without the need for a controller or marker. However, some patents have suggested that Apple might be making a controller for more interactive experiences, like games.

Google Glass (left) has a tech-focused style

Since this would be a first-generation product, expect most experiences to be passive. Look to Google Glass for this one; expect to see incoming iMessages, directions overlaid in real life, and highlighted points of interest.

While there won’t be a camera to guide these experiences, LiDAR plus geolocation, compass direction, head tilt, eye tracking, and other sensors would go a long way in ensuring accuracy when displaying AR objects.

The iPhone and Apple Watch could also act as anchors for AR interactions. Users looking through their AR glasses would be the only ones able to see what is being displayed, which would give them greater privacy.

Additional leaks point to a “realityOS” that could be used to power the augmented reality glasses. While code in iOS references this term, no other elements have been discovered.

Mark Gurman shared a rumor suggesting Apple could call the operating system xrOS for its AR and VR products. The “XR” in this case would mean “extended reality.”

A rumor suggests that Apple is building AR features for iMessage that could benefit future Apple headsets. It isn’t clear what kind of interactions this would introduce, but the initial release is apparently targeted for iOS 17.

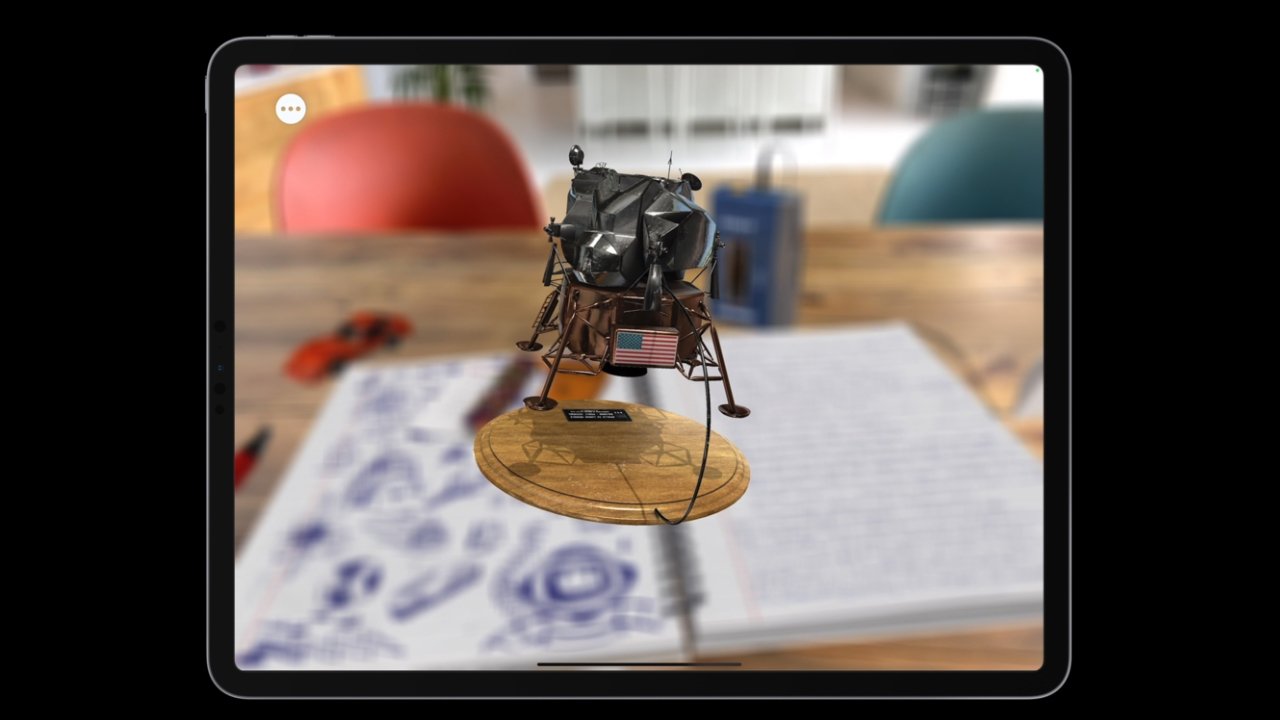

AR features in iOS show potential for “Apple Glass”

Apple has been pushing augmented reality for years and new features are added to ARKit with each version of iOS. These building blocks all add up to what could be an operating system and software features built for “Apple Glass.”

ARKit

Location anchors will let developers and users attach augmented reality objects to certain locations in the real world. Wearing an AR headset or set of AR glasses while walking around town would let you see these objects without further interaction. On-device machine learning will be able to anchor objects by using known map data and building shapes precisely.
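As a rough illustration of the idea behind location anchors, the sketch below picks which geographically pinned AR objects are close enough to a wearer to be shown. It is not ARKit code: the `LocationAnchor` class, the `visible_anchors` function, and the 50-meter radius are all hypothetical names and values chosen for this example, and the distance check uses the standard haversine formula rather than anything Apple has described.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass(frozen=True)
class LocationAnchor:
    """Hypothetical AR object pinned to a real-world coordinate."""
    name: str
    latitude: float
    longitude: float


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def visible_anchors(anchors, user_lat, user_lon, radius_m=50.0):
    """Return anchors within radius_m of the user, nearest first.

    The radius is an assumed cutoff for this sketch, not a documented value.
    """
    measured = [
        (haversine_m(user_lat, user_lon, a.latitude, a.longitude), a)
        for a in anchors
    ]
    measured.sort(key=lambda pair: pair[0])
    return [anchor for distance, anchor in measured if distance <= radius_m]
```

A headset would run a check like this continuously as the wearer moves, so nearby objects appear without any tap or scan.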

LiDAR makes positioning objects without physical markers fast and easy

The new Depth API was created to take advantage of the LiDAR system on fourth-generation iPad Pros, a sensor that could also be included on “Apple Glass.” Using the depth data captured and specific anchor points, a user can create a 3D map of a location in seconds. This feature will allow more immersive AR experiences and give the application a better understanding of the environment for object placement and occlusion.
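To make the link between depth data and a 3D map more concrete, here is a minimal sketch of how per-pixel depth readings can be turned into 3D points and used for an occlusion test. It assumes a simple pinhole camera model with made-up intrinsics (`fx`, `fy`, `cx`, `cy`) and a depth map given as nested lists of meters; none of the function names come from ARKit, and Apple's actual pipeline is not public in this form.

```python
def unproject(u, v, depth_m, fx, fy, cx, cy):
    """Convert pixel (u, v) with a depth reading into a 3D point in camera space.

    Assumes a pinhole model: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. All values are illustrative.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_map_to_points(depth, fx, fy, cx, cy):
    """Build a point cloud from a depth map (rows of depths in meters).

    Missing readings (None) and non-positive depths are skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d is not None and d > 0:
                points.append(unproject(u, v, d, fx, fy, cx, cy))
    return points


def is_occluded(virtual_depth_m, measured_depth_m):
    """A virtual object is hidden when a real surface sits in front of it."""
    return 0 < measured_depth_m < virtual_depth_m
```

With a point cloud like this, an app can decide where a virtual object may rest, and `is_occluded` shows the basic comparison that lets a real wall or table hide part of it.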

Face and hand tracking have also been added in ARKit, which will allow for more advanced AR games and filters that utilize body tracking. This could be used to translate sign language live or attach AR objects to a person for a game like laser tag.

App Clips

Apple announced the rumored App Clips feature, which aims to ease the friction of using some commerce apps out in the world.

App Clips use images like QR codes to reveal content, which could be useful for a wearable AR device

With iOS 14, users can tap an NFC sticker, click a link, or scan a special QR code to access a “Clip.” These App Clips are lightweight portions of an app, required to be less than 10MB, and will show up as a floating card on your device. From there, you can use Sign in with Apple and Apple Pay to complete a transaction in moments, all without downloading an app.

The specialized QR codes are the most notable here, as Apple could be scattering these around the world for use with App Clips on the iPhone now, only to have them work with “Apple Glass” in the future. The premise would be the same in AR: walk up to a QR code and see an AR object you can interact with to purchase without downloading an entire app.

HomeKit Secure Video Face Recognition

HomeKit Secure Video, which was added to iOS in 2019, offers some smart features for users, such as recognizing objects that appear in videos for easier searching through footage. In iOS 14, the security feature will gain a face classification function, allowing it to identify individual people when they approach the camera.

HomeKit Secure Video face recognition using saved faces in Photos could be a precursor to AR glasses technology

Facial recognition data in the Photos app is used to make this function work, and Apple is applying it to people being recorded in real-time. While “Apple Glass” is not expected to have a camera, it will have LiDAR and other sensors that could create enough data to determine who a person is.

One of the earliest features users desired of an AR headset is instant face recognition to remind them of names or important information. This has wide-ranging privacy issues on its own, but if the data set is limited to what a user has on their iPhone, it is much safer and more useful.

“Apple Glass” Price and Release

The biggest leak about Apple’s potential wearable came on May 18, 2020. Jon Prosser said that the “Apple Glass” would be $499 plus the cost of prescription lenses. It is likely those lenses will need to be custom-made as well, so depending on insurance, customers could easily spend $1500 on the entire device.

Ming-Chi Kuo says that Apple’s AR glasses will be released after an Apple VR headset. The VR headset is due sometime in 2023 with the glasses coming by 2025. Kuo also stated Apple will release contact lenses with AR capabilities sometime in 2030 or later.

The first VR headset will pave the way for developers while a second generation headset is expected a year later in 2024. This second model would be lighter and have more consumer-focused abilities and be used for calls. Ultimately this would lead to a lightweight set of “Apple Glass” that could be worn for AR or MR experiences by 2025.