What is A/B testing? – Types, Process, How to Perform, Examples

The goal of marketers with each landing page, paid ad campaign, or email marketing effort is to generate leads and improve the conversion rate. To get such results, your copy, design, and offers need to grab users’ attention and compel them to click.

But which headline will leave a great first impression, and which CTA button will compel them to click? You can let your intuition predict the answer, or you can make a data-driven judgment using A/B testing.

A/B testing removes much of the guesswork and gives you more reliable results. Read on to learn what to A/B test, how to perform A/B tests, and which mistakes to avoid when optimizing your A/B test results.

A/B testing, or split testing, is a method of comparing two or more versions of a webpage or element to see which one performs better. By randomly showing different versions to segments of visitors, businesses can determine which option drives more engagement or conversions.

You begin by deciding what you want to test, for instance, the size of the CTA button above the fold. Next, you choose how you’ll evaluate its performance, for example by tracking the click rate. Then, you create two versions of a webpage: the control, which is the original version, and the variant, which is the changed version with the larger button. Finally, you show these two versions to two different sets of users and determine which version gets more clicks.

You can use A/B testing in different ways: on landing pages, in emails, and in paid ad campaigns.

For example, you can send two versions of an email to your email list (segmenting the list first) and figure out which version generates more sales. Then you can send out the winning version next time.

Or you might test two versions of ad copy and see which one gets more clicks and conversions.

Each visitor is unique, and how they interact with your site varies with their intent, expectations, and pain points. With A/B testing, you can understand which elements resonate most with a particular segment of your audience. Then, you can make changes based on the results to keep your visitors happy.

Here are some of the reasons for doing A/B testing:


• Analyze your marketing budget

You can analyze where your marketing spending gets you the highest returns. You can A/B test your paid ad campaigns and save thousands by scrapping copy or design that does not generate clicks or leads. If you don’t test, you’ll never know.

• Help you develop data-driven insights

Leaving your website’s optimization to guesswork is like putting all your money on the healthiest-looking horse while ignoring all the other factors that can influence its chances of winning. A/B testing, by contrast, is a data-driven approach that lets you test changes in a live environment where your visitors’ behavior is the feedback. And as you monitor the changes, you collect data that you can check for statistical significance, a way of establishing that an observed difference is unlikely to be due to chance alone.


• Reduce bounce rate

No one likes to see users bounce off their website. Users can bounce because of long signup forms, a long checkout process, an unclear call to action, and so on. By testing and improving, you can significantly reduce the bounce rate and keep users engaged on your website.

With A/B testing, you can test different versions of your page to identify the one that reduces friction, resolves users’ pain points, keeps them on the site, and compels them to convert.

Different types of A/B tests you can perform

There are three subtypes of A/B testing that you should know about:

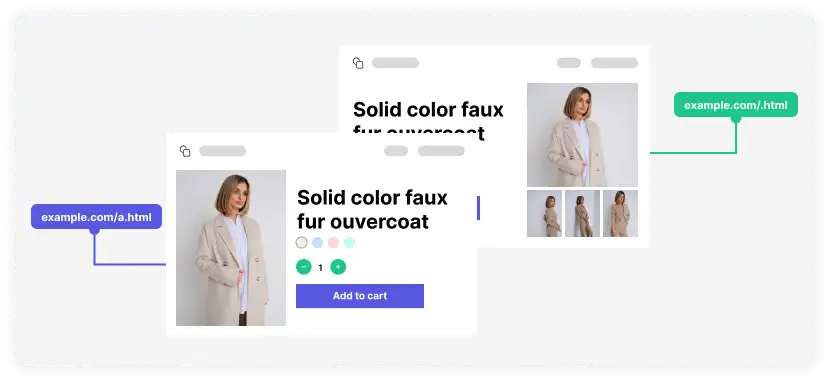

1. Split testing

In split testing, you test a completely new version of an existing web page to analyze which one performs better. You should use split testing when you want to test the entire design or copy of the existing landing page without touching the existing page.

2. Multivariate testing

Multivariate testing (MVT) is a form of A/B testing where variations of multiple page variables are simultaneously tested to analyze which combination performs the best out of all the possible permutations.
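To see how quickly the number of combinations grows, here is a minimal Python sketch. The variable names and variations are hypothetical examples, not taken from a real test; the sketch simply enumerates every combination a multivariate test would have to cover.

```python
from itertools import product

# Hypothetical page variables and their variations (illustrative only).
variables = {
    "headline": ["Save time today", "Work smarter"],
    "cta_text": ["Start free trial", "Get started"],
    "hero_image": ["photo", "illustration", "video"],
}


def all_combinations(variables):
    """Return every combination of variations as a list of dicts."""
    names = list(variables)
    return [dict(zip(names, combo)) for combo in product(*variables.values())]


combos = all_combinations(variables)
print(len(combos))  # 2 * 2 * 3 = 12 page versions to test
```

Each extra variable multiplies the number of page versions, which is why multivariate tests usually need much more traffic than a simple two-version test.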

This method is more complex as it involves creating many variant pages and is best suited for advanced marketing, product, and development professionals.

3. Multi-page testing

In multi-page testing, you test changes to a particular element, say the CTA button, across multiple pages.


What are the different page elements you can A/B test?

You can test several variables to optimize the landing page’s performance. Some of the crucial elements you can test are:

Headline and subheadings

The headline is what grabs users’ attention, and subheadings compel them to keep reading, click on your paid ad, or scroll down to read the copy below the fold. So, you should test your headline by creating different variations and seeing which works better.

Other than the copy, try changing the font styles, type, size, and alignment of the headline and subheadings.

Social proof

Social proof can be testimonials, case studies, expert reviews, awards, badges, and certificates that bolster the claim made by your headline or subheadline. It also helps build trust and credibility among website visitors. Different social proof appeals to different audiences.

Run an A/B test to see which type of social proof gets you more conversions. You can also try placing social proof in different positions, such as above the fold, on signup forms, and above the footer, and monitor the results.

Images and other visual elements

You can test background images, above-the-fold images, or content images. Besides that, you can test replacing images with GIFs, illustrations, or videos.

Navigation bar

You can also optimize users’ experience by A/B testing your site’s navigation bar. The navigation bar is how users move from one page to another, so it should be clear, easy to understand, and connected to all the important pages.

You can test the positioning of the navigation bar (horizontal or vertical), or you can test changes to the navigation items.

Forms

Forms act as a medium through which users get in touch with you, so the signup process must be frictionless. You shouldn’t give users a chance to drop off during this stage. Notion uses this strategy effectively, which is why their signup form converts so well. Here’s what you can test:

Try removing unnecessary signup fields.

Use social proof such as testimonials on the signup form page.

Add inline forms on different pages, such as case studies or blog pages.

Try out different form copy and CTA buttons.

Page’s design and layout

The way you present different elements on your page matters. Even if you’ve written compelling landing page copy, badly formatted copy won’t convert. So, you should test the arrangement and placement of elements on your webpage and ensure a hierarchical order that users can easily follow.

Pop-ups

Pop-ups are great for capturing website visitors, but will they work for you? You can find out using A/B testing. Test out different kinds of pop-ups: exit-intent pop-ups, content-related pop-ups, time- or scroll-based pop-ups, etc.


A/B testing process

The A/B test process consists of two major steps: Identifying the elements to test and then running the test.

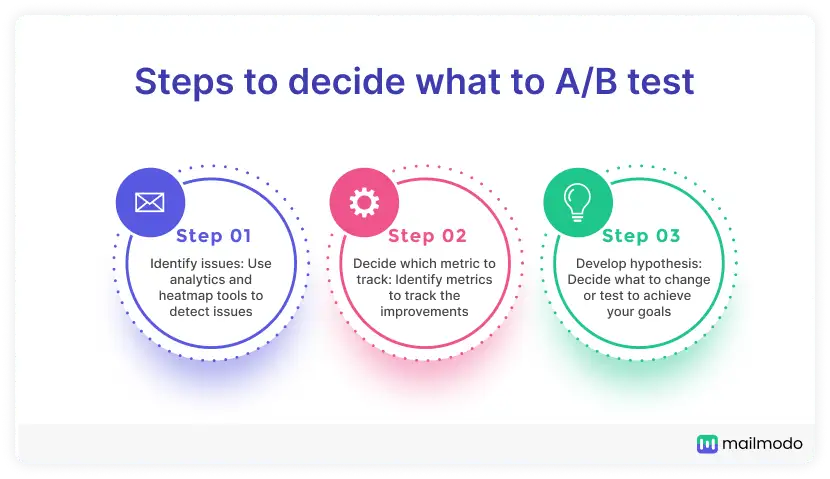

How to decide what to A/B test

Here is our 3-step approach to help you figure out what to A/B test:

Step 1: Identify issues

Before you can start testing, you need something to test.

Here are a few suggestions to get ideas for your A/B testing:

Ask your customer support and sales teams. They interact directly with customers and can give you accurate insights into what appeals to them, which you can then use on your landing page.

Use surveys to get users’ feedback on your products and any issue or concern they might have. Address these issues in your page copy.

Analyze users’ on-site behavior and interactions using heatmap and marketing analytics tools to find where they click and which elements are most visible to them. Then try giving them more of what works.

Analyze your competitors’ site’s structure, how they talk to their customers, and which kind of social proof they use.

Step 2: Decide which metric to track

In this step, you identify the goal you want to achieve by deciding which metric you want to improve. This is the metric you will use to judge the outcome of your A/B tests.

Step 3: Develop a hypothesis

Once you identify the goal, you need to develop hypotheses (backed up by data) about changes that will improve the metric. For example, a hypothesis can be: adding social proof above the fold will increase the click-through rate by 5%.

Once you follow all three steps, your data might look like the table below:

| Issues | What to track | Hypothesis |
| --- | --- | --- |
| Users bouncing before scrolling below the fold | Click-through rate, bounce rate | Testing above the fold: title or subheading, image positioning, and adding social proof |
| Users click on the signup form but don’t finish it | Click-through rate, form submission rate | Reducing the signup fields and adding testimonials on the signup page will reinforce trust |
| Increase AOV, checkouts, or sales | Click-through rate, conversion rate | Change homepage promotional imagery, navigation elements, checkout funnel elements |

How to run the A/B test

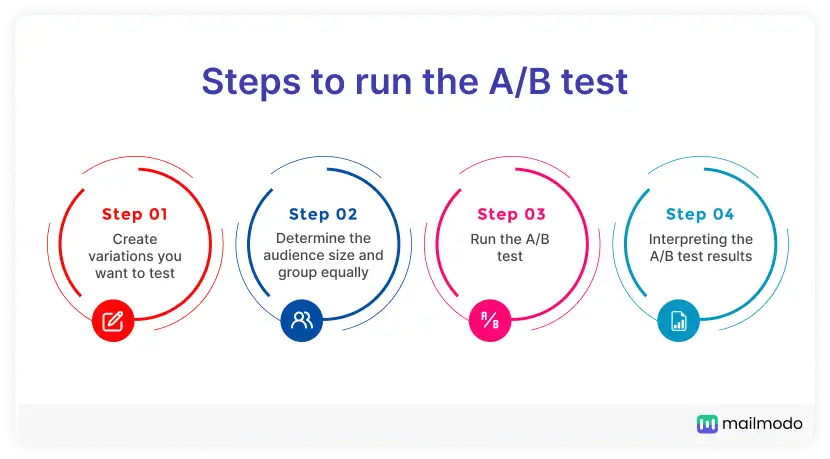

Here is our 4-step approach to running the A/B test successfully:

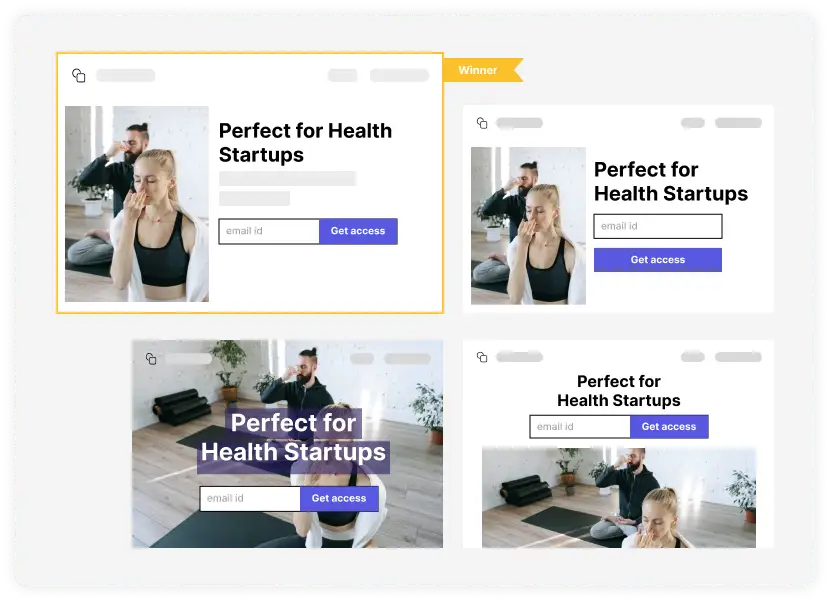

Step 1: Create variations

To understand this step, you need to know about these three terms:

Control: the original version of the page.

Test version (variant): the version where you make the changes.

Variation: any element you change for testing, such as the CTA button or the images.

In this step, you will put the hypothesis into action. In addition, you’ll also decide which A/B testing approach you will use – split, multi-page, or multivariate testing. Then, create the test version of the page.

For instance, if you want to increase the click-through rate, you might change the CTA copy or button size. If you want to get more form submissions, then reducing the number of signup fields or adding testimonials right beside the form can be a variation.

Step 2: Determine the audience size and group equally

To accurately determine the success of the A/B test, you need to choose the right sample size and divide the users equally among the groups. The number of groups will depend on the testing method you are using.

For example, in split testing, you need to have two different groups, while in multi-page testing, the number of groups depends on the number of test versions.
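One common way to split users into equal groups is to hash a stable user identifier, so the same user always sees the same version. The sketch below is a minimal illustration under that assumption; the function name, the experiment name, and the user IDs are hypothetical, and real A/B testing tools handle this step for you.

```python
import hashlib
from typing import Sequence


def assign_group(user_id: str, experiment: str, groups: Sequence[str]) -> str:
    """Deterministically map a user to one of the groups.

    Hashing the experiment name together with the user ID gives each
    experiment its own independent, roughly even split.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return groups[int(digest, 16) % len(groups)]


groups = ["control", "variant"]
print(assign_group("user-42", "cta-button-size", groups))
```

Because the assignment depends only on the identifier, a returning visitor stays in the same group for the whole test.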

Furthermore, having the right sample size is crucial to validate the test results. For example, according to Julian Shapiro:

To statistically validate a 6.3% or greater conversion increase, a test needs 1,000+ visits.

To statistically validate a 2%+ increase, a test needs 10,000+ visits.
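Figures like these depend heavily on your baseline conversion rate, so treat them as rough guides. As a hedged illustration, the sketch below uses the standard normal-approximation formula for comparing two proportions to estimate how many visitors each version needs. The 10% baseline rate, the 5% significance level, and the 80% power are assumptions chosen for the example, and the function name is hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Estimate visitors needed per version to detect a relative lift.

    Uses the normal approximation for a two-sided test of two proportions.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_power * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Assumed 10% baseline conversion rate; smaller lifts need more visitors.
for lift in (0.50, 0.20, 0.05):
    needed = sample_size_per_group(0.10, lift)
    print(f"{lift:.0%} relative lift: {needed} visitors per version")
```

The general pattern matches the quoted figures: the smaller the improvement you want to detect, the more visits the test needs.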

Step 3: Run the A/B test

Once everything is set up, including the variations and sample size, you can run the test using a tool such as Google Optimize, Crazy Egg, or Omniconvert. Then let the test run for an adequate time before you begin to interpret the results.

You might ask at this stage – how long should I run the A/B test for?

Typically, you should run a test until you have statistically significant data before you make any changes. Beyond that, the duration depends on the variations, sample size, and test goals.

Google offers the following insights into this matter:

Keep an experiment running until at least one of these conditions has been met:

Two weeks have passed to account for cyclical variations in web traffic during the week.

At least one variant has a 95 percent probability of beating the baseline.
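To make the second condition more concrete, here is a rough Python sketch of how a "probability of beating the baseline" can be estimated. It assumes a simple Beta-Binomial model with uniform priors and Monte Carlo sampling; this is an illustration, not necessarily how Google's tool computes the figure, and the function name and visitor counts are hypothetical.

```python
import random


def prob_variant_beats_control(control_conv, control_n, variant_conv, variant_n,
                               draws=20000, seed=0):
    """Estimate P(variant rate > control rate) by sampling Beta posteriors."""
    rng = random.Random(seed)  # fixed seed so repeated runs agree
    wins = 0
    for _ in range(draws):
        # Beta(1 + conversions, 1 + non-conversions) is the posterior
        # for a conversion rate under a uniform prior.
        control_rate = rng.betavariate(1 + control_conv, 1 + control_n - control_conv)
        variant_rate = rng.betavariate(1 + variant_conv, 1 + variant_n - variant_conv)
        if variant_rate > control_rate:
            wins += 1
    return wins / draws


# Hypothetical data: 100 of 1,000 control visitors and 150 of 1,000
# variant visitors converted.
probability = prob_variant_beats_control(100, 1000, 150, 1000)
print(f"Probability variant beats control: {probability:.3f}")
```

If the estimate is at or above 0.95, the variant meets the 95 percent threshold described above.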

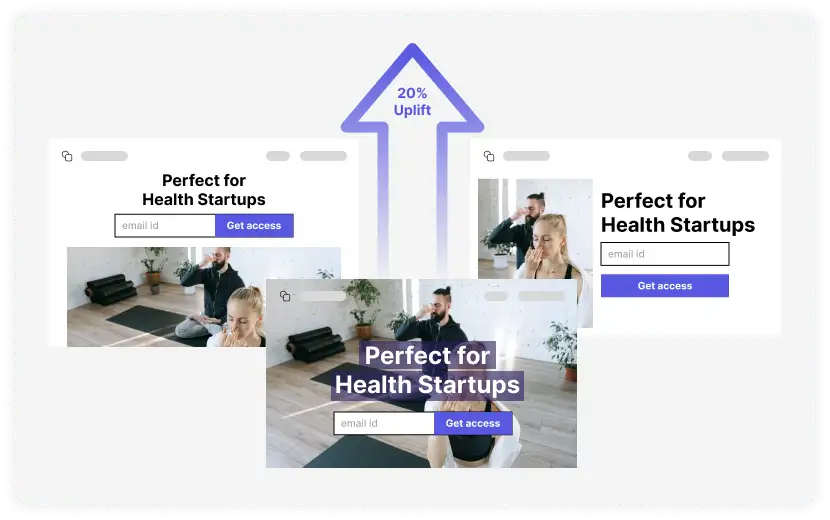

Step 4: Interpreting the A/B test results

Most commonly, you’ll be using A/B test tools to monitor the performance and analyze the data collected. That is the basic approach every marketer must be familiar with.

However, seeing improved metrics on one of the versions doesn’t mean you should implement the changes right away. Because many factors influence an A/B test, you need to use your judgment and implement the changes only if they are likely to bring a better return on investment.

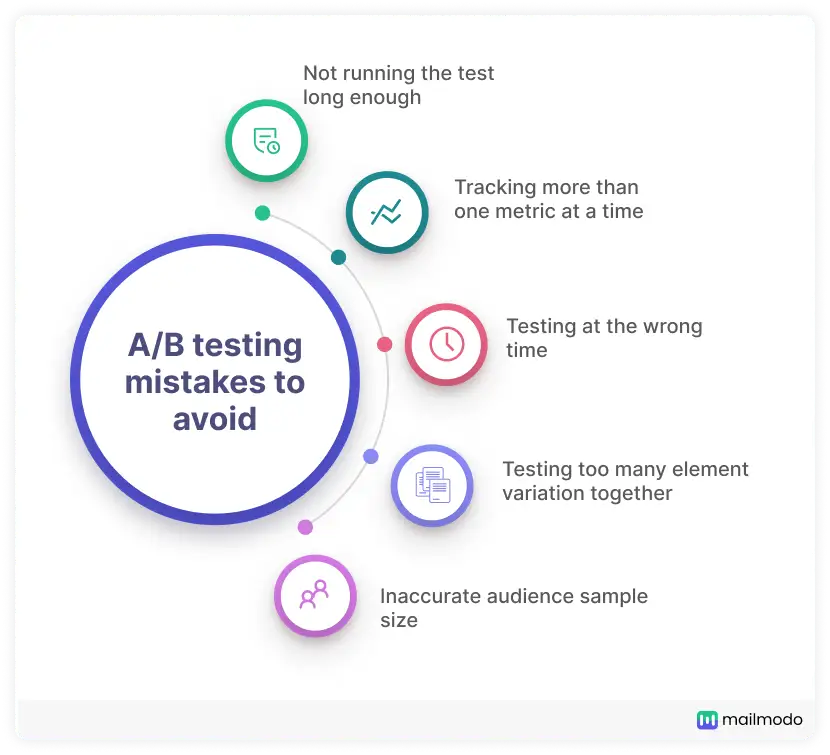

5 A/B testing mistakes to avoid

A/B testing may seem easy to conduct, but many things can jeopardize the test result. Here are the most common mistakes you should avoid:

1. Not running the test long enough

Many marketers don’t let the tests run their course. Stopping a test too early can jeopardize the accuracy of the result. How long a test needs to run is influenced by sample size, variants, and goals.

You can use VWO’s A/B test significance calculator to determine whether you’ve achieved statistical significance for your A/B test.

Enter the number of visitors and conversions for both versions, and it will calculate the A/B test statistical significance. It also gives the P-value, a statistical value that helps you assess the reliability of the result.
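As a rough idea of what such a calculator does with those inputs, the sketch below runs a two-sided two-proportion z-test in Python. This is a common textbook approach and only an assumption about the general method, not a description of VWO's implementation; the function name and the visitor counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(visitors_a, conversions_a, visitors_b, conversions_b):
    """Return the two-sided p-value for the difference in conversion rates."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate under the assumption that both versions convert equally.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical data: version A converts 100 of 1,000 visitors,
# version B converts 150 of 1,000.
p_value = two_proportion_p_value(1000, 100, 1000, 150)
print(f"p-value: {p_value:.4f}")
print("significant at 5%" if p_value < 0.05 else "not significant at 5%")
```

A p-value below your chosen threshold (0.05 is a common choice) suggests the difference between the versions is unlikely to be due to chance alone.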

2. Tracking more than one metric

If you’re looking at too many metrics (usually more than one), you risk making an unreliable or incorrect judgment.

3. Testing at the wrong time

If your site gets the most traffic during weekdays, then A/B testing only on weekends will not give you statistically significant results. Likewise, comparing a test run during the holiday season with one run during the slump season will give you the wrong result. In both cases, you aren’t comparing like with like. Thus, you should run tests during a suitable time to get reliable results.

4. Testing too many elements together

Avoid testing more than one element at a time because it will be difficult to pinpoint which element influenced the test’s success or failure. Besides, the more elements you test at once, the bigger the sample size and the more traffic you’ll need to reach a sound judgment.

5. Inaccurate sample size

Sample size is as crucial as timing: running a test at the right time won’t give you the expected result if your sample size is too small or too large. To get reliable results, you should split your audience the right way. To avoid this mistake, you can use A/B testing tools like OptinMonster that automatically divide the traffic according to the number of tests you’re running.